Meta learning is one of the most important pillars of next-gen analytics products. The models need to continuously update themselves to give high quality recommendations. The Decision Option Generator (DOG) – NAVIK AI suite’s proprietary algorithm for consolidating disparate individual model (Churn Propensity, Purchase Frequency, Collaborative Filtering etc.) outputs – is the umbrella recommender system for NAVIK MarketingAI and NAVIK SalesAI. DOG incorporates meta learning in the form of custom business rules in the model outputs. Thus, it comes up with appropriate recommendations that are devoid of plausible anomalies.

Need for Meta Learning in DOG

Due to the following emergent or volatile factors, NAVIK products constantly need to learn from themselves so they can adapt and update recommendations before the next model refresh cycle

- Turbulent market dynamics

In case of untoward macro-economic circumstances (like recession, digitization of currency, etc.), the user may want to discard recommendations that are not relevant to that state. - Mutating business needs and varying definitions of the sanity of recommendations

In case of a specific business focus over a certain period of time (e.g. phasing out sales of a particular product category, etc.), the user may want to discard recommendations that are not relevant to that state.

- Performance-based revaluation (appreciation/depreciation) of recommendations.

Some recommendations that are found productive/unproductive after they’re pitched to end customers need to be appreciated (or depreciated) for subsequent laps of recommendations.

- User discomfort with certain attributes of recommendations

A recommendation comprises many attributes: account, contact, product, time, channel of communication, rationale for recommendation, etc. If any of these attributes are not compatible with a sales pitch, the user may want to discard it and look for a fitting substitute.

Implementation of Heuristic Reinforcement Learning in DOG

To implement meta learning, a reinforcement multiplier, or a term multiplied to the final recommendation score, is employed in the DOG.

This ensures that it captures the following feedback, thus making the product a self-learning mechanism:

- Categorical feedback from user (e.g. discarding/restructuring recommendations)

- Automatic implicit feedback (e.g. changing the win rate of recommendations)

Illustration –Reinforcement Multiplier in NAVIK SalesAI’s DOG

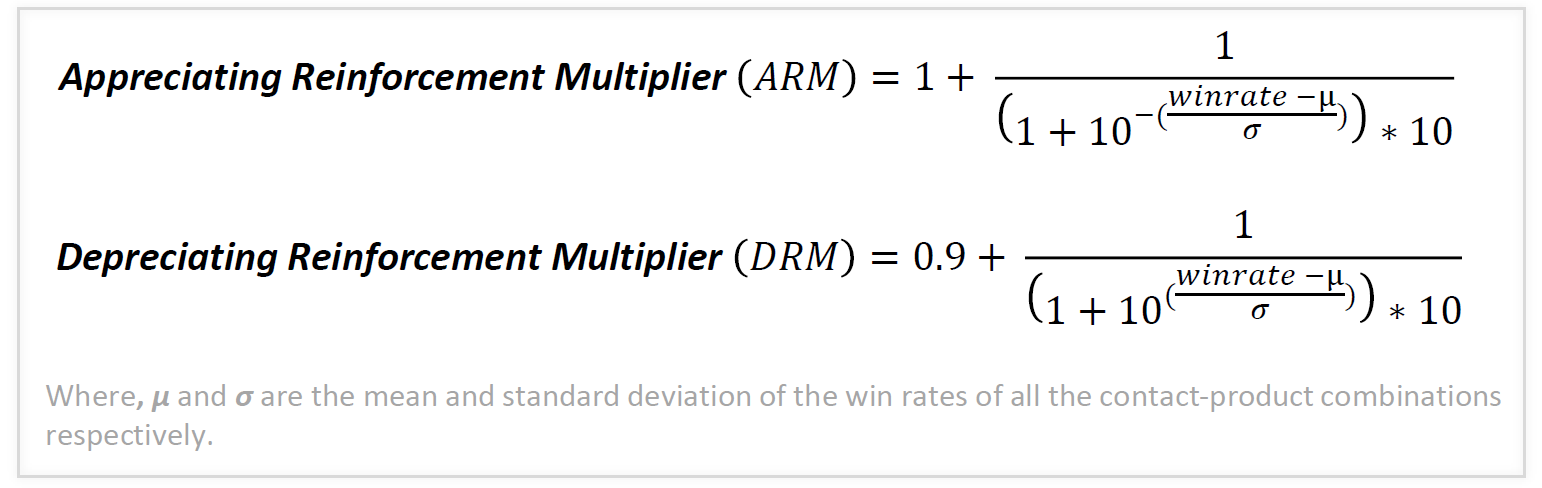

Appreciating and depreciating reinforcement multipliers are two mathematical functions of the contact-product-level win rates (#win/#pitches) of various opportunities. These are multiplied to the outcomes (e.g. the Contact Probability Score) of the corresponding constituent DOG model (e.g. Contact Lead Scoring) or the Consolidated Recommendation Score, determining the opportunities’ win and loss.

After every week, based on the win rates of the contact-product combinations, the ARM and DRM can be quantified with the aid of these formulae. However, the application of ARM/DRM differs with different triggers for reinforcement learning.

Recursion in Reinforcement Learning

Reinforcement learning, due to its very nature, learns from the user’s actions in a dynamic environment and gives feedback (in the form of rewards and punishments). You must be wondering how recursion additionally comes into the frame. Recursion, here, has nothing to do with the act of reinforcement per se, but with its degree. So, in other words, the ARM and DRM values will recursively evolve depending upon the parameters (like the win rate of contact-product combinations in NAVIK SalesAI recommendations or the user’s consecutive acceptance/rejection of recommendations) over time.

So, what tenet should the algorithm follow to recursively evolve? Firstly, the rate of recursion needs to be slow to offset any abrupt actions or chance events in the environment. Secondly, the degree of recursion can be defined subjectively.

After a considerable number of instances (> 500 laps of recommendation generation), the values on the reinforcement multiplier curves of the corresponding win rates of contact-product combinations will be auto-multiplied by the nth power of its complement; the curve will be updated accordingly. For instance, for higher win rates (0.9), the ARM applied to the recommendation score is again multiplied by (0.1)n. Here, the value of n can be between 0 to 3, depending upon how badly your business case warrants the reward/punishment of the positive/negative feedback.

This is just a simple adaptation of recursion in reinforcement learning. It makes meta learning altogether more powerful in its basic premise of learning to learn.

Technical articles are published from the Absolutdata Labs group, and hail from The Absolutdata Data Science Center of Excellence. These articles also appear in BrainWave, Absolutdata’s quarterly data science digest.