Vivid Visualization | Model Card for MLOps

Model cards are designed to give a clear, comprehensive picture of a machine learning model. A model card describes what the model does, who its intended audience is, and how it is maintained. It also provides information about the model’s architecture and the data used to train it. Beyond raw performance numbers, a model card puts the model’s limitations in context and describes options for mitigating its risks.

Model cards are short documents that accompany trained machine learning models and report benchmarked evaluations under a range of conditions, for example across different demographic groups. Model cards also disclose the context in which a model is intended to be used, details of the performance evaluation procedures, and other relevant information.
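In practice, benchmarked evaluation across a range of conditions is often reported as disaggregated metrics: the same metric computed separately for each group of interest as well as overall. The sketch below assumes plain Python with no ML library; the function name `disaggregated_accuracy` and the toy data are hypothetical, and accuracy simply stands in for whatever metric a real model card would report.

```python
from collections import defaultdict


def disaggregated_accuracy(y_true, y_pred, groups):
    """Return overall accuracy plus accuracy per group.

    Hypothetical helper: `groups` holds one label per example for a single
    evaluation factor (e.g. a demographic group or a device type).
    """
    if not (len(y_true) == len(y_pred) == len(groups)):
        raise ValueError("y_true, y_pred and groups must have the same length")
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        total[group] += 1
        correct[group] += int(truth == pred)
    # Accuracy within each group, then pooled accuracy over all examples.
    per_group = {g: correct[g] / total[g] for g in total}
    overall = sum(correct.values()) / sum(total.values())
    return overall, per_group


if __name__ == "__main__":
    # Hypothetical toy labels, predictions, and group membership.
    y_true = [1, 0, 1, 1, 0, 1, 0, 0]
    y_pred = [1, 0, 0, 1, 1, 1, 1, 1]
    groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
    overall, per_group = disaggregated_accuracy(y_true, y_pred, groups)
    print(f"overall: {overall:.2f}")
    for group, acc in sorted(per_group.items()):
        print(f"group {group}: {acc:.2f}")
```

With these toy numbers, group A scores noticeably higher than group B, a gap that the single overall figure hides. Surfacing that kind of difference is one of the main reasons model cards report results per group.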

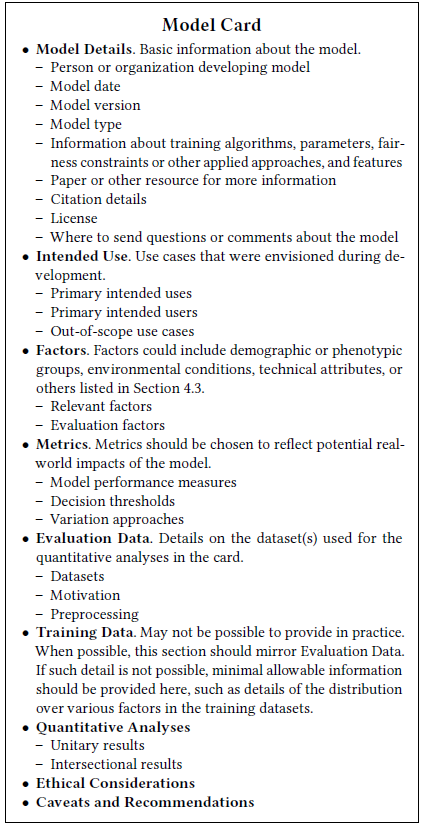

A model card contains the following sections:

- Model Details – Basic information about the model, such as its version and type.

  - Person or organization developing the model – Who developed the model? Stakeholders can use this to understand how the model was developed and to spot potential conflicts of interest.

  - Model Date – When was the model developed?

  - Model Version – Which version of the model is this, and how does it differ from previous versions?

  - Model Type – The basic model family or architecture, such as an artificial neural network (ANN) or logistic regression, described independently of any hyperparameter tuning.

- Intended Use – Why the model was created, and what it should and should not be used for.

  - Primary uses – Whether the model was built for general-purpose use or for specific tasks.

- Factors – Ideally, an overview of model performance across a range of relevant factors, such as groups (for example, demographic groups), instrumentation, and environment.

  - Relevant factors – The variables most likely to affect how well the model performs.

  - Evaluation factors – Which factors are reported and why they were chosen. If they differ from the relevant factors, the card should explain why.

- Metrics – Chosen to reflect the model’s potential real-world impact. They mainly cover model performance measures, decision thresholds, and the approach taken to uncertainty and variability.

- Evaluation Data – Each evaluation dataset should ideally point to documentation of its source and composition, and evaluation datasets should be publicly available for third-party use. They may be existing datasets or new ones released alongside the model card’s analyses to enable further benchmarking. This section records which datasets were used, why they were chosen, and whether any preprocessing was applied.

- Training Data – Ideally, this section contains as much information about the training data as the Evaluation Data section gives about the evaluation data. In practice, that level of detail may not be available, for example when the training data is proprietary.

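- Quantitative Analysis – Shows how the chosen metrics vary when results are broken down by each evaluation factor and by combinations of factors (intersectional results).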

- Ethical Considerations – Shows stakeholders the ethical issues that were considered during model development. Major components include:

  - Data

  - Human life

  - Mitigation

  - Risk and harm

Source: Semantic Scholar
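Because these sections form a fixed structure, a team can keep them in code next to the model and render the card from there. The following is a minimal sketch, assuming Python 3.9+ and a hypothetical `ModelCard` dataclass whose field names mirror the sections above. It is not the schema of any particular model card library, and each section is kept as free text for simplicity.

```python
from dataclasses import dataclass, fields


@dataclass
class ModelCard:
    """Hypothetical container for the model card sections.

    Field names are illustrative only, not a standard schema.
    """
    model_details: str
    intended_use: str
    factors: str = ""
    metrics: str = ""
    evaluation_data: str = ""
    training_data: str = ""
    quantitative_analysis: str = ""
    ethical_considerations: str = ""

    def to_markdown(self) -> str:
        """Render each non-empty section as a Markdown heading plus its text."""
        parts = []
        for f in fields(self):
            text = getattr(self, f.name).strip()
            if not text:
                continue  # skip sections that have not been filled in yet
            title = f.name.replace("_", " ").title()  # "model_details" -> "Model Details"
            parts.append(f"## {title}\n\n{text}")
        return "\n\n".join(parts)

    def missing_sections(self) -> list[str]:
        """Return the names of sections that are still empty."""
        return [f.name for f in fields(self) if not getattr(self, f.name).strip()]


if __name__ == "__main__":
    # Hypothetical example content for illustration only.
    card = ModelCard(
        model_details="Churn classifier, version 1.2, logistic regression.",
        intended_use="Ranking customers for retention outreach; not for credit decisions.",
        metrics="Precision and recall at a 0.5 decision threshold.",
    )
    print(card.to_markdown())
    print("Missing:", card.missing_sections())
```

Keeping the card as a data structure means a release pipeline could, for instance, refuse to publish a model while `missing_sections()` is non-empty. A production schema would likely replace free-text fields such as `metrics` with structured values.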

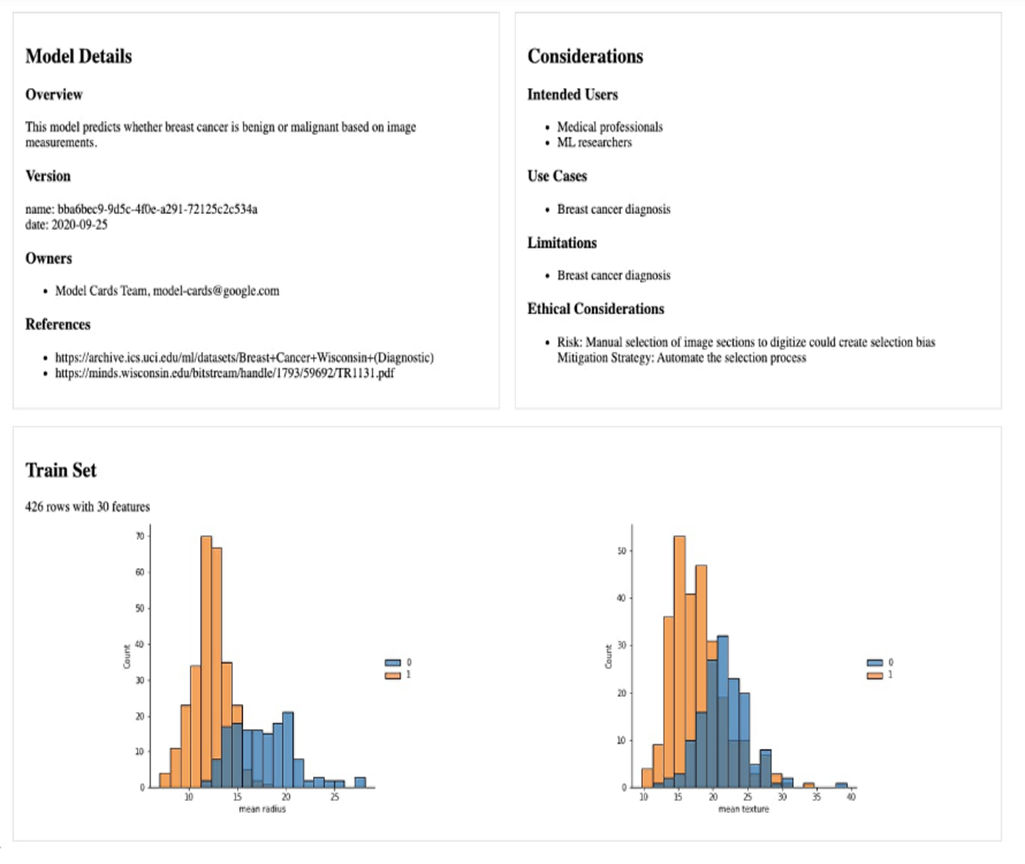

Diagram chart:

Source: Google Cloud

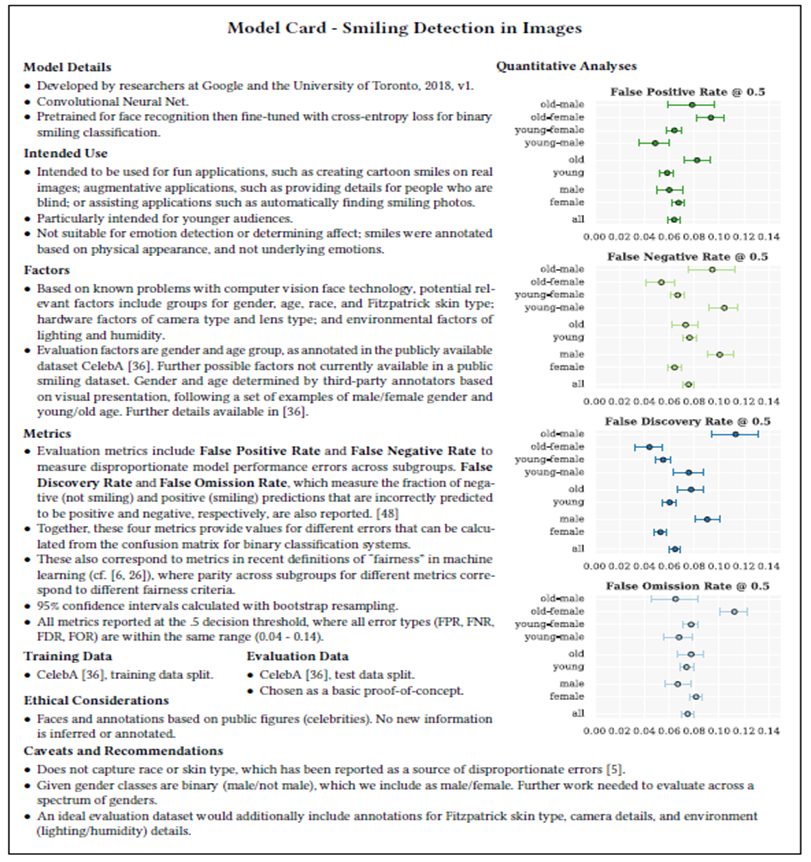

Source: Semantic Scholar

References

Margaret Mitchell et al.: Model Cards for Model Reporting

Google Cloud: About Model Cards

Authored by: Tejashvi Anand, Consultant at Absolutdata